Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning | Analytics & IIoT

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

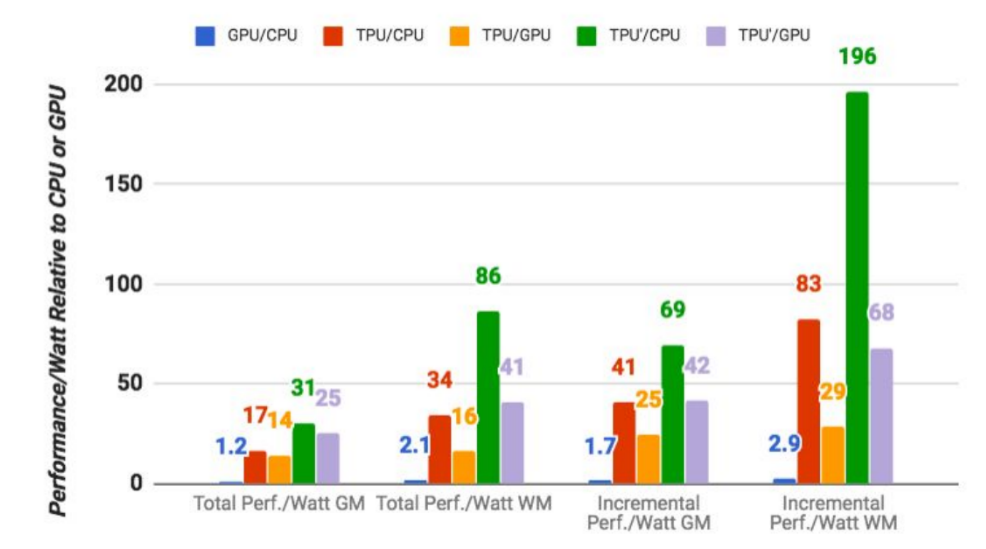

Google says its custom machine learning chips are often 15-30x faster than GPUs and CPUs | TechCrunch

![\[D\] Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning : r/MachineLearning](https://external-preview.redd.it/ESD1BhcbiOwKLuPetUl_hjdOaknbwN1A6tjkxBMHCXY.jpg?width=640&crop=smart&auto=webp&s=89f3e68b690f7a4642b8cd71b924503e5011310d)

\[D\] Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning : r/MachineLearning

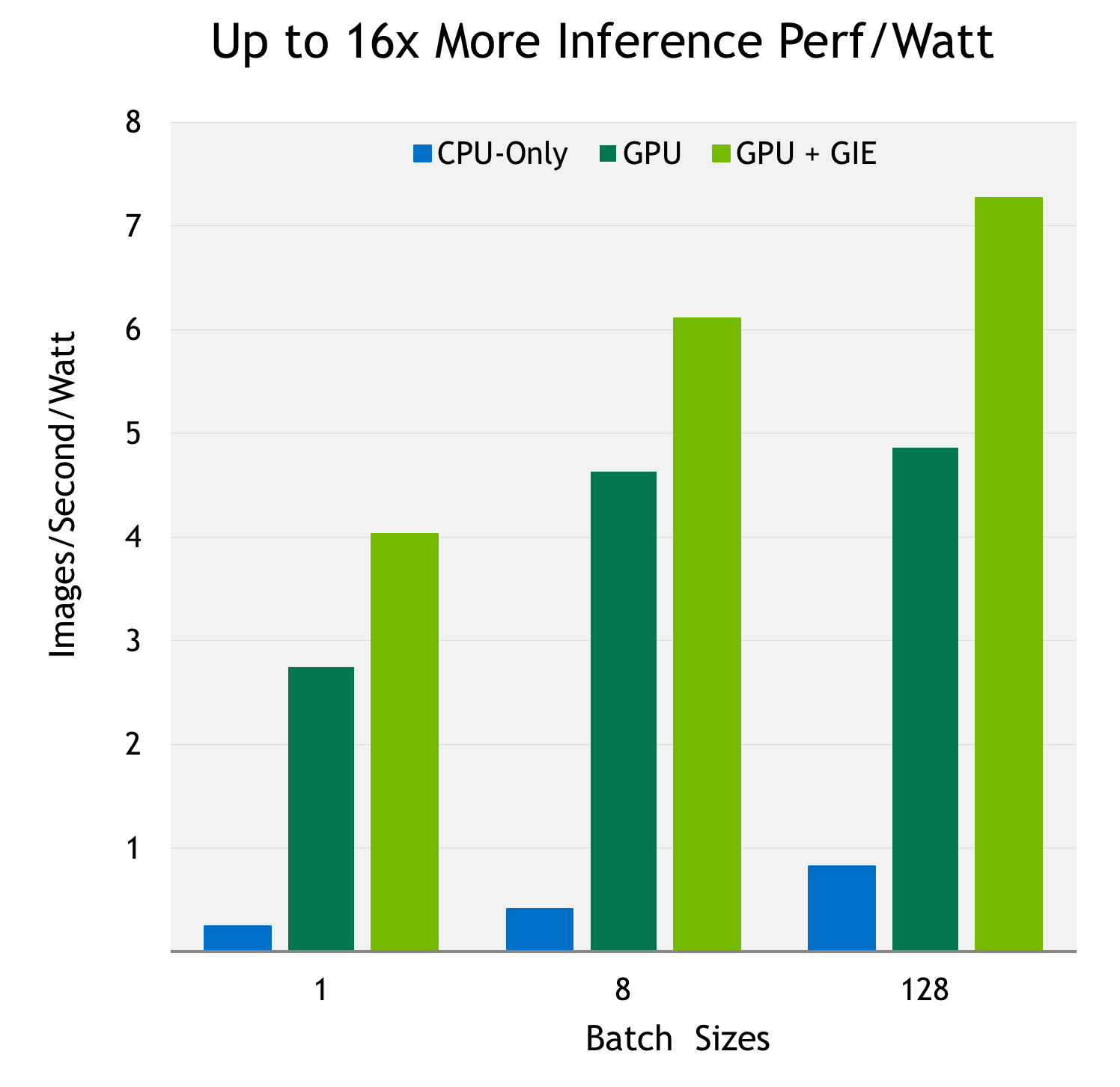

PNY Pro Tip #01: Benchmark for Deep Learning using NVIDIA GPU Cloud and Tensorflow (Part 1) - PNY NEWS

![\[D\] Which GPU(s) to get for Deep Learning (Updated for RTX 3000 Series) : r/MachineLearning](https://external-preview.redd.it/5u8jDdRCaP-A20wEDShn0DFiQIgr2DG_TGcnakMs6i4.jpg?auto=webp&s=25a683283f0d0a367ff1476983de4b134c72d57f)